NUI pathways to AI

NUIs, or Natural User Interfaces, are designed to feel as natural as possible to the user. The goal of an NUI is to create seamless interaction between human and machine, making the interface invisible to the user. A lofty goal, I know… but after 18 years of user experience design and usability testing, I can testify that some users will always be confused by the interface layer that lies between us and our information.

Death of the Mouse

In his February 2006 TED talk, "The radical promise of the multi-touch interface," Jeff Han used an eloquent phrase that I believe opened the NUI floodgates. He said: “It’s completely intuitive; there’s no instruction manual. The interface just kind of disappears…” and later: “This is exactly what you expect, especially if you haven’t interacted with a computer before.” At the beginning of his talk, he predicted that the multi-touch interface “is going to really change the way we interact with machines from this point on.” And he was right. In just a year’s time, Apple released the first iPhone, making multi-touch interfaces ubiquitous and accessible to mass consumers.

In the space of one year, people’s notion of what it means to use and work on computers and machines changed from point-and-click and key commands to tap, scroll and multi-touch. Our once-terrifying notion of content having to live below the fold has changed forever.

The form factor of the handheld device has had another unrelated but equally positive impact on user interfaces, in what is known today as a “mobile first” mindset: the notion that advanced, power-computing feature sets can be stripped from the mobile device and reserved for desktop/laptop computing. This essentially forced UI designers and developers to restrain themselves in favor of mobile elegance and essential features geared to the mobile context. The net effect has been to push UI designers and developers toward a more critical mindset when constructing interfaces.

Introduction of Voice

In 2011, Apple introduced its mobile product consumers to Siri, a new paradigm of NUI that brought voice recognition and speech-to-text controls to the playing field. Though Siri and other voice interfaces have been with us for nearly 5 years, adoption has yet to hit an inflection point. A study by Intelligent Voice essentially concluded that only 1 in 6 iPhone users actually use Siri on a regular basis.

Irrespective of the Intelligent Voice study, Conversational Interfaces are taking off. Amazon’s Alexa (disguised as a Bluetooth speaker) has targeted the Smart Home marketplace as a stand-alone, yet extensible and IFTTT-enabled, vocal command station for the common household. The vocal I/O movement isn’t going away… in fact, China is showing VUIs moving towards a tipping point.

Andrew Ng, Baidu’s Chief Scientist, makes a statement similar to Jeff Han’s in an MIT article: “The best technology is often invisible, and as speech recognition becomes more reliable, I hope it will disappear into the background.” Jim Glass, in the same article, states: “Speech has reached a tipping point in our society. In my experience, when people can talk to a device rather than via a remote control, they want to do that.”

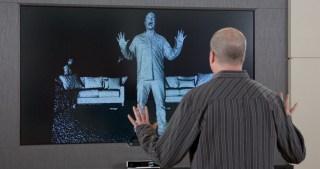

Creepy Motion Detection

The NUI journey doesn’t end with Voice Commands and Multi-Touch interfaces. Running parallel to the mobile device NUI revolution, the Xbox Kinect, with its motion detection and voice interface, has been in households since 2010. It is creepy when you think about it watching and listening. Its obvious disadvantage, being tethered to the home entertainment center, hasn’t stopped the creative minds of the OpenNI (natural interfaces) movement from running with non-set-top applications for the device. (Note: Apple’s 2013 purchase of PrimeSense, a founding member of OpenNI, is a sign of more NUI to come.)

Jedi Mind Tricks

Gesture-based controls add a new dimension to NUI. Most of us take the accelerometer sensor in the iPhone for granted; unless you have played with Sphero, Ollie or the Parrot Drone, you may not realize the ease and natural responsiveness of the accelerometer, which is now built into the Apple TV remote as well.

The NOD, Leap Motion, and MYO are stretching the application of gesture-based NUI. I was fortunate to attend a demo at the Longmont TinkerMill, where one of the members showed a 3D-printed prosthetic being controlled by EKG sensors (https://twitter.com/LiminaUX/status/727679681529413634). This was nearly direct control of the prosthetic from the user’s neurological intent. This totally blew my mind.

There are a host of NUIs layered into Augmented and Virtual Reality devices. The front-runners, Google Glass, Oculus, and Microsoft’s (unfortunately named) HoloLens, are collapsing as much of the interface as possible.

The Birth of HAL

The final component of NUI on its path to laying a foundation for Artificial Intelligence is Machine Learning, which is currently running in the background. The more deeply and regularly we interact with computers in our natural manner, the more the pattern recognition algorithms already in place lay a foundation for the most natural human-computer interaction of all: true AI.

While Microsoft’s Tay flopped on Twitter, falling prey to the legions of internet trolls, IBM’s Watson is getting ready to change everything. It’s time to start getting ready for a new age of human-computer interaction in the realm of NUI and AI. What will this mean for you? How will you apply NUI models in your next-gen software?